With high-throughput long-read sequencing, it is now affordable and routine to produce a de novo genome assembly for microbes, plants and animals. The quality of a reference genome impacts biological interpretation and downstream utility, so it is important that researchers strive to achieve quality similar to “finished” assemblies like the human reference, GRCh38.

Until a time when sequence data and resulting assemblies can regularly achieve reference-quality, assemblies should be evaluated in the three key dimensions: contiguity, completeness, and correctness. However, the most commonly used measures of genome quality only tackle two of the three Cs.

Contiguity is often measured as contig N50, which is the length cutoff for the longest contigs that contain 50% of the total genome length. In this era of long-read genome assemblies, a contig N50 over 1 Mb is generally considered good.

Completeness is often measured using BUSCO (Benchmarking Universal Single-Copy Orthologs) scores, which look for the presence or absence of highly conserved genes in an assembly. The aim is to have the highest percentage of genes identified in your assembly, with a BUSCO complete score above 95% considered good.

Correctness, the third and final C, is more challenging to measure. Correctness can be defined as the accuracy of each base pair in the assembly and is most often measured as concordance of an assembly to a gold standard reference. Of course, when sequencing a novel species there may not be a reference against which to measure. Furthermore, concordance is only a good measure for accuracy when the gold-standard itself is very high quality and when there is little biological divergence between the reference sample and assembly sample (figure 1).

So, how does one properly measure the accuracy of a generated genome assembly? Well, we explored several methods you might find useful and broke them down by what type of orthogonal data is needed for each.

ASSESS FRAMESHIFTS

Data needed: transcript annotations

One measure of correctness is the number of frameshifting indels in coding genes. Frameshifts often disrupt the production of the protein encoded by the gene and are rare. Thus, most observed frameshifts are actually assembly errors.

This approach is similar to BUSCO, but rather than utilizing a small conserved set of genes, a larger set of genes are analyzed. This requires a set of transcripts from the same (or very closely related) sample, which are commonly generated as part of a genome annotation project. PacBio RNA sequencing, using the Iso-Seq method, is a good strategy for genome annotation.

The primary advantage of this approach is that you may be able to use an annotation or RNA sequencing data that is already in existence. The primary disadvantages of this approach are that it assesses only a small percentage of the genome (often less than 1%, often some of the most conserved regions) and may underestimate accuracy since not all frameshifts are errors.

DELVE INTO HIGH-CONFIDENCE REGIONS

Data needed: reference genome and short reads

Sometimes a reference genome is available for the same species but for a different individual than the one being assembled. In such cases, it is useful to define “high-confidence regions,” where the reference is a good match to the sample, and then assess the assembly only within those high-confidence regions. The Genome in a Bottle Consortium has applied such an approach for human samples.

To build high-confidence regions as Kingan, et al. did for human, rice, and Drosophila, short-read sequencing data is mapped to the reference and used to exclude low-confidence regions including those with abnormal coverage or in close proximity to variants. Within the resulting high-confidence regions, concordance is a good measure of assembly accuracy.

The advantages of this approach are that it provides a good measure of assembly accuracy and explicitly identifies errors as discordances between an assembly and the high-confidence regions. Discordances can then be examined to determine how to improve the assembly.

The disadvantages are that it requires an independent reference genome from which to start, as well as additional short-read data. Also, the accuracy estimate can be somewhat optimistic by excluding “difficult” regions from evaluation or somewhat pessimistic if true biological variants are not removed from the benchmark.

EXPLORE BAC COMPARISONS

Data needed: BAC sequences

In cases where a reference is not available but another set of high-quality sequences, such as bacterial artificial chromosomes (BACs), exist for the same sample, you can measure concordance between your assembled contigs and the BAC sequences. This method was used by Vollger, et al. when validating the accuracy of one of the first human assemblies generated using PacBio highly accurate long reads, known as HiFi reads.

COUNT ERRORS WITH SHORT READS

Data needed: short reads

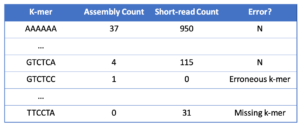

It is possible to measure accuracy even for a species with no existing reference genome by comparing the k-mers in an assembly to k-mers from short reads from the same individual. One tool to do this is yak from Heng Li. Another is merqury, developed by Arang Rhie in Adam Phillippy’s group.

The advantages of this approach are that it does not require a reference genome and does not ignore difficult regions of the assembly. It also provides a way to measure completeness by flipping the comparison and looking for k-mers present in the short reads that are missing in the assembly. Merqury has the additional ability to track the coordinates of errors: it outputs files that can be loaded as IGV tracks so the user can visualize misassembles or other errors. Merqury has many additional functions like outputting spectra-cn plots and, for users with a trio, assessing contig phasing accuracy with statistics and plots.

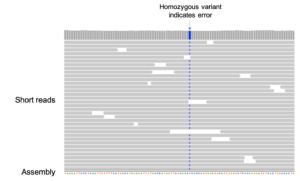

Similar to the k-mer approach above, short-read data can be used to count errors by aligning short reads to the assembly and identifying single nucleotide differences. An error rate can then be calculated by dividing the total count of SNVs by the number of bases in an assembly covered by at least three short reads. The short-read data can be from a closely related individual, although estimates of correctness are most accurate when the same individual is used as Koren, et al. did with an F1 cross of two breeds of cattle.

Like the k-mer method, this approach requires no reference genome or transcript dataset and does not ignore difficult regions of the assembly. Unlike the k-mer approach, potential errors in the assembly can be identified and characterized in order to improve the assembly method.

Looking to the future

Exciting progress in long-read sequencing and genome assembly has made it standard to produce contiguous, complete genomes. In order to generate genomes that are not simply assembled, but are also effectively used for downstream biology, we must address the third dimension of quality: correctness. The techniques discussed above make it easy to measure correctness with a variety of different orthogonal data types. We expect these approaches will identify which sequencing workflows produce the most accurate genomes and will nudge the field towards an era of reference-grade de novo assemblies.

—

Learn more about HiFi sequencing data for your organism of interest or get in touch with a PacBio scientist to scope out your project.